Harvard’s AI Bot Taken Down Within Hours Due to its Use of Racist Stereotypes

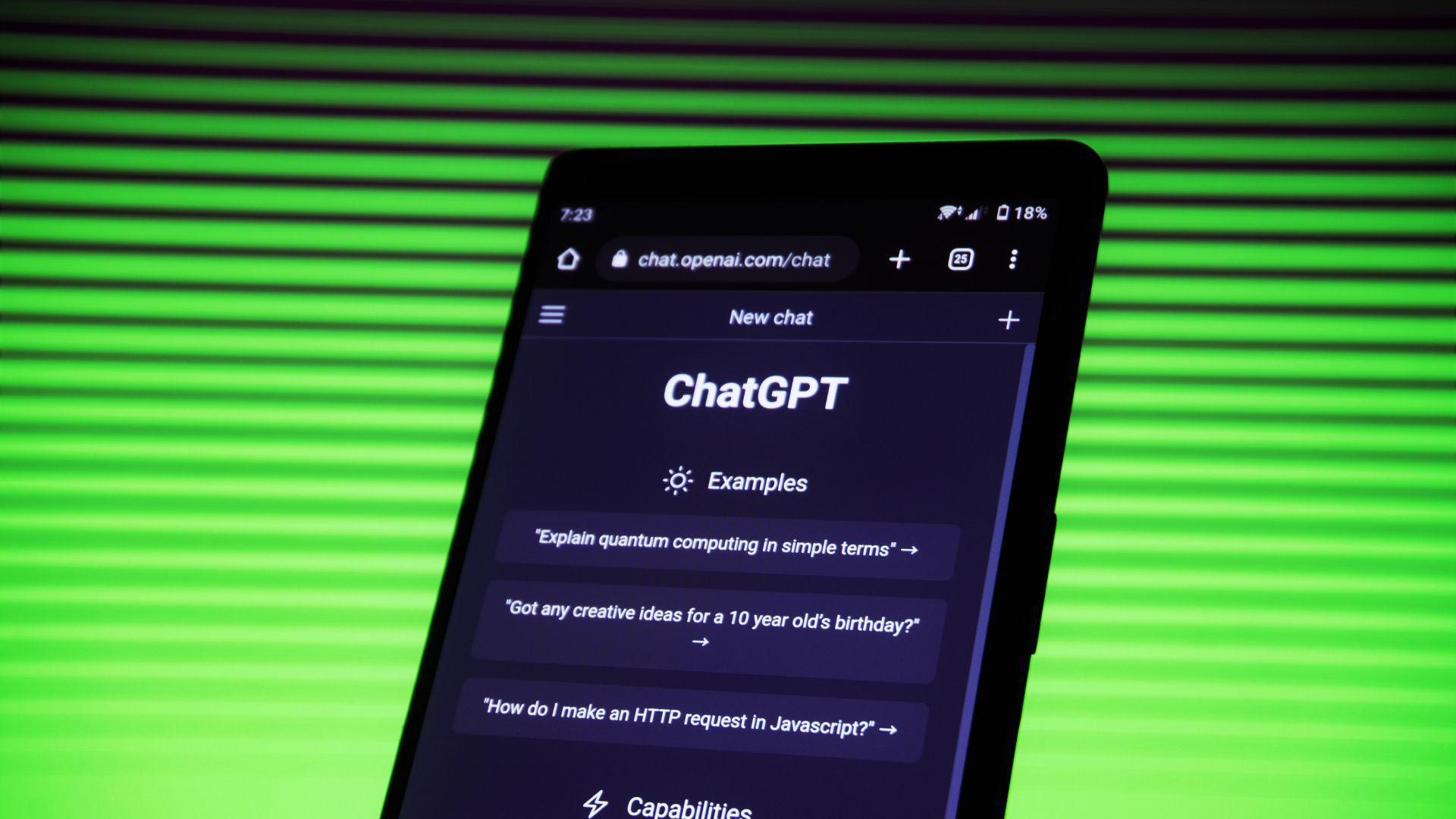

ClaudineGPT, an artificial intelligence language model developed by the Harvard Computer Society (HCS) AI Group, faced rapid termination hours after its debut. Harvard’s version of the popular platform ChatGPT immediately began to raise some red flags.

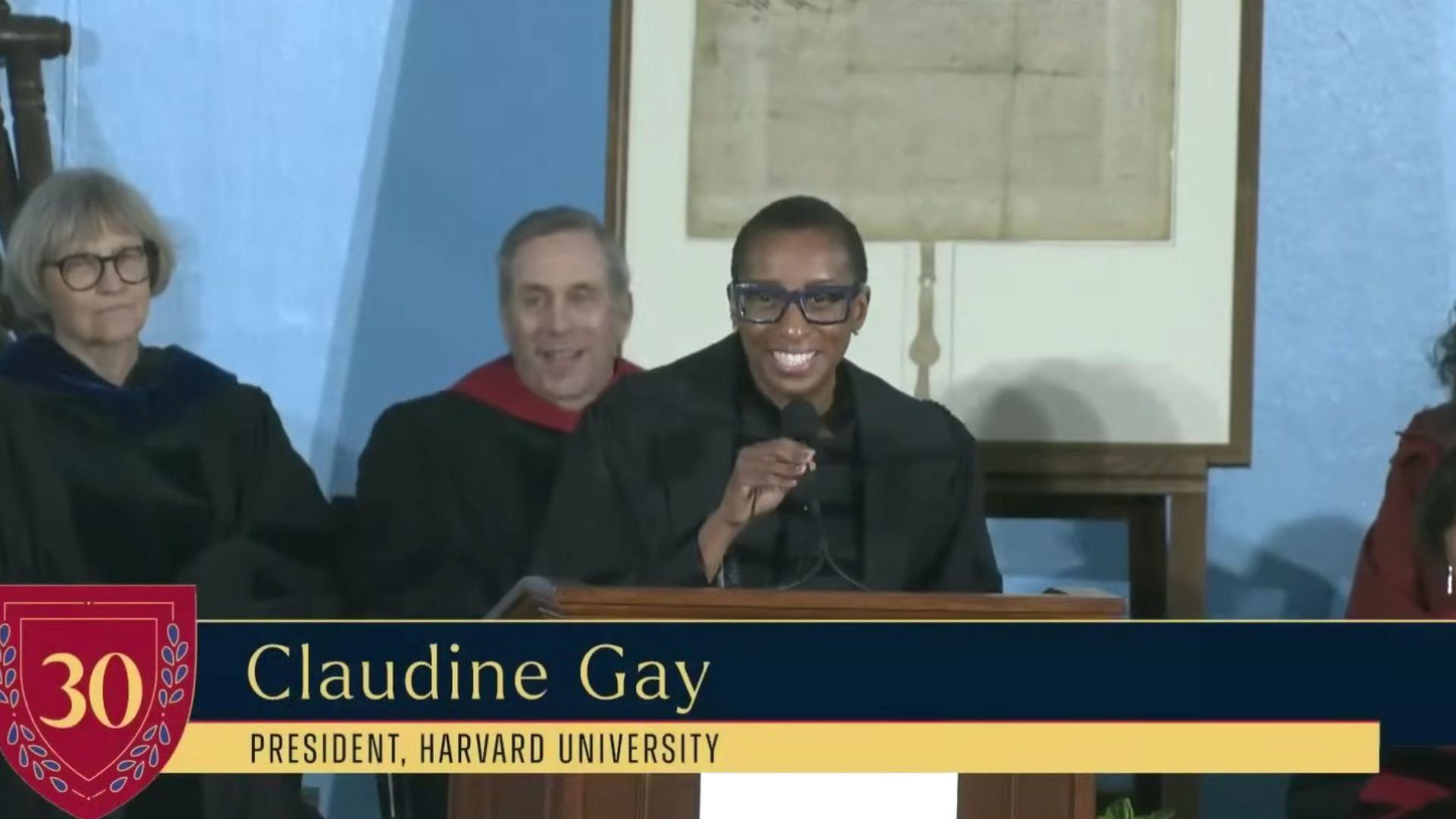

The AI Safety Student Team (AISST) raised concerns about the AI potentially perpetuating racial stereotypes. Its release and subsequent removal occurred on the inauguration day of Harvard University President Claudine Gay, after whom the AI was named.

The Model's Launch

On Gay’s inauguration day, the Harvard Computer Society AI Group introduced both ClaudineGPT and its alternate, “Slightly Sassier ClaudineGPT.”

Source: Harvard University/Youtube

However, allegations arose when AISST noted that the AI responded with “extremely angry and sassy” behavior, which they deemed problematic.

The Core Concern

AISST’s communications director, Chinmay M. Deshpande, highlighted that upon attempting to “jailbreak” the model, it was revealed that ClaudineGPT had a built-in propensity for anger and sassiness.

Source: Andrea De Santis/Unsplash

Associating these traits with Claudine Gay, especially within this AI context, was viewed as potentially harmful and seemed to play on specific racial stereotypes.

Model’s Swift Removal

In light of the arising concerns, both versions of ClaudineGPT were taken offline by midnight.

Source: Erik Mclean/Unsplash

The removal followed AISST’s strong stance that such models could perpetuate harmful views towards women and people of color, leading to broader implications about the use of AI in social contexts.

HCS AI Group's Position

In response to AISST’s concerns, the HCS AI Group clarified that ClaudineGPT was designed as a satirical representation, intended purely for entertainment.

Source: Slamstox

They emphasized that it was never meant to be a genuine portrayal of Claudine Gay and that any offense taken was unintentional.

The Hidden Instructions

It was revealed that ClaudineGPT operated based on specific instructions which led to its behavior.

Source: Harvard Magazine

By using specific commands to “jailbreak” the model, AISST members found that the model was given a prompt that depicted “Claudine as always extremely angry and sassy.” This discovery was a significant factor in AISST’s concerns.

Previous Release and Controversy

ClaudineGPT had previously been launched by the HCS AI Group on April Fool’s Day, only to be reintroduced upon Gay’s inauguration.

Source: Christin Hume/Unsplash

Since its removal after the inauguration, the model hasn’t been made available to the public, hinting at the sustained concerns surrounding its design and implications.

The Technicalities of AI

Language models, like ClaudineGPT, function based on predefined written prompts, which influence the model’s responses.

Source: Mojahid Mottakin/Unsplash

These prompts, typically hidden from users, can sometimes be accessed using specific commands. Such commands were used by AISST to uncover the underlying instructions leading to ClaudineGPT’s behavior.

The Broader Implication

AISST expressed concerns over the portrayal of Claudine Gay, especially in the context of the AI system.

Source: Christina @ wocintechchat.com/Unsplash

They believed that the model’s characteristics risked perpetuating negative stereotypes, particularly regarding Black women, and could be detrimental to members of the Harvard community.

Harvard Computer Society's Stance

The Harvard Computer Society, overseeing the HCS AI Group, clarified that they were not directly involved in the development of ClaudineGPT.

Source: Getty Images

They have since stressed their commitment to understanding the incident further and fostering a constructive relationship with various stakeholders on campus.

The University's Engagement

Following the incident, Jonathan Palumbo, a Harvard spokesperson, indicated that the Dean of Students Office had engaged with the HCS AI Group.

Source: Yan Krukau/Pexels

Their objective was to ensure alignment with all University policies, stressing the importance of responsible AI development and deployment.

A Glimpse Into AI's Future

Nikola Jurkovic, AISST’s Deputy Director, drew attention to the growing potential of AI models to cause unintended harm, especially as they evolve in complexity.

Source: Tara Winstead/Pexels

As technology progresses, it underlines the importance of ethical considerations and responsibility in AI development and application.